
Before Google’s latest I/O event could even begin, the tech giant was already trying to stake a claim for its new AI. As music artist Dan Deacon played trippy, chiptune-esque electronic vocals to the gathered crowd, the company displayed the work of its latest text-to-video AI model with a psychedelic slideshow featuring people in office settings, a trip through space, and mutating ducks surrounded by mumbling lips.

The spectacle was all to show that Google is bringing out the big guns for 2023, taking its biggest swing yet in the ongoing AI fight. At Google I/O, the company displayed a host of new internal and public-facing AI deployments. CEO Sundar Pichai said the company wanted to make AI helpful “for everyone.”

What this really means is simply that Google plans to stick some form of AI into a growing number of the user-facing software products and apps on its docket.

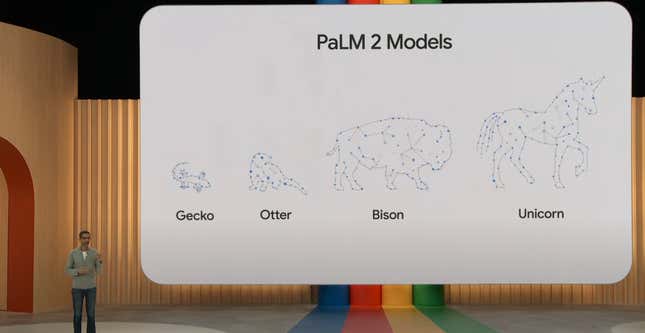

Google’s sequel to its language model, called PaLM 2

Google unveiled its latest large language model that’s supposed to kick its AI chatbots and other text-based services into high gear. The company said this new LLM is trained on more than 100 languages and has strong coding, mathematics, and creative writing capabilities.

The company said there are four different versions of PaLM 2, with the smallest, “Gecko,” small enough to work on mobile devices. Pichai showed off one example where a user asked PaLM 2 to give comments on a line of code to another developer while translating it into Korean. PaLM 2 will be available in a preview sometime later this year.

PaLM 2 follows 2022’s PaLM and the PaLM-E multimodal model released in March. In addition, its limited medical-based LLM, called Med-PaLM 2, is supposed to accurately answer medical questions. Google claimed this system achieved 85% accuracy on the US Medical Licensing Examination. Though there are still enormous ethical questions to work out with AI in the medical field, Pichai said Google hopes Med-PaLM will be able to identify medical diagnoses by looking at images like an X-ray.

Google’s Bard upgrades

Google’s “experiment” for conversational AI has gotten some major upgrades, according to Google. It’s now running on PaLM 2 and has a dark mode, but beyond that, Sissie Hsiao, the VP for Google Assistant and Bard, said the team has been making “rapid improvements” to Bard’s capabilities. She specifically cited its ability to code or debug programs, since it’s now trained on more than 20 programming languages.

Google announced it’s finally removing the waitlist for its Bard AI, letting it out into the open in more than 180 countries. It should also work in both Japanese and Korean, and Hsiao said it should be able to accept around 40 languages “soon.”

Hsiao used an example where Bard creates a script in Python for doing a specific move in a game of chess. The AI can also explain parts of its code and suggest additions to its base.

Bard can also integrate directly with Gmail, Sheets, and Docs, exporting text directly to those programs. Bard also uses Google Search to include images and descriptions in its responses.

The company is allowing for extensions from sites like Instacart, Indeed, and Khan Academy, as well as many of its existing Google apps, making them all accessible to the Bard AI. Bard is also gaining connections to third-party apps, including Adobe’s Firefly AI image generator, allowing it to generate images using that external system.

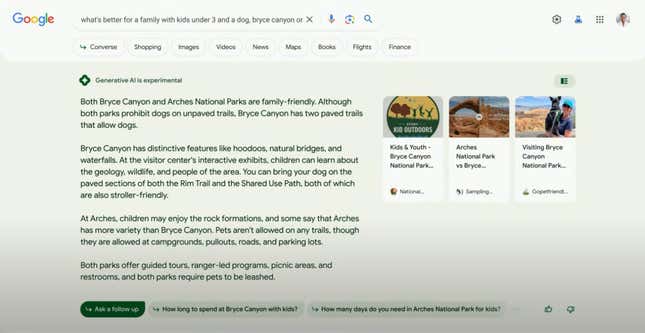

AI in Google Search

Google’s bread and butter, Search, is going to integrate the company’s AI to create a “snapshot” in response to users’ queries. These AI-generated summaries appear at the top of a page on the browser version of Google Search, while a set of links relating to the AI-generated text appear on the right.

Users can click to expand that view, where each line of text gets its own set of links exploring the topic in greater detail. The company said this can act as a “jumping off point” for users and their searches while still giving them access to official sources as well as users’ blogs.

The AI snapshot also works when people search for products, as it displays prices and commentary on a search. For example, a search for “good bike for a 5 mile commute with hills” will generate a few bullet points about design and motor assistance, then rank a number of different brands based on those criteria.

The system also works on mobile, through a “Converse” tab sitting alongside Video, Images, News, and so on. On Android, the snapshot takes up most of the screen while the expanded search results are pushed down to the bottom. All of users’ previous prompts remain above, and users can scroll up to find previous results. Users who continue to scroll down on the page can see more links, like regular Search results.

Google is still calling this an “experiment” with AI in Search. The availability is limited, and there’s currently a waitlist on the new Search Labs platform for those who want to access this new AI in search and to use the AI to help with coding.

Even More AI in the Workspace apps, and AI will email for you

Google has already talked up adding AI content generation in Gmail and Google Docs, but now the company said it’s expanding the so-called “Workspace AI collaborator” to add even more generative capabilities in its cloud-based apps. These generative AI and “sidekick” features are being released on a limited basis, but will be handed out to a broader user base later this year.

Much like Microsoft announced with its own 365 apps earlier this year, Google’s adding generative AI into its office-style applications. Aparna Pappu, the VP of Google Workspace, said in addition to the limited deployment of a “help me write” feature on Gmail and Docs, the company is adding new AI features to several Workspace apps, including Slides and Sheets. The spreadsheet application can generate generic templates based on user prompts, such as a client list for a dog walking business.

Google announced its new “Help me write” feature, which uses an AI to generate a full email response based on previous emails. Users can then iterate on those emails to make them more or less elaborate. Pichai used the example of a user asking customer service for a refund on a canceled flight. This is on top of long-existing content generation abilities in Gmail like Smart Compose and Smart Reply.

For Slides, users can now use text-to-image generation to add to a slideshow. The generator creates multiple instances of an image, and users can further refine those prompts with different styles.

In Gmail, the AI “sidekick” can automatically summarize an email thread and can find and cite earlier documents relating to that conversation. As far as Docs goes, the existing AI is getting more elaborate. It now suggests extra prompts based on generated text, and it can now add in AI-generated images directly within Docs. This also works within Slides, letting users generate speaker notes based on AI-generated summaries of each individual slide.

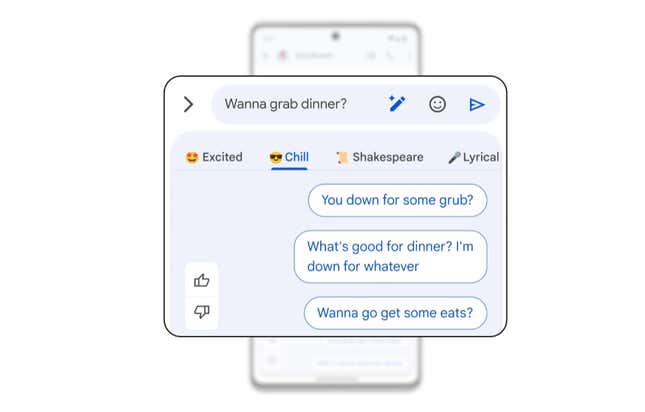

AI-generated wallpapers and AI-written texts

Google wants to let AI personalize every user’s experience on their Android mobile devices, even letting users use an AI to customize their phones’ wallpaper with AI-generated images.

The company revealed that it’s putting generative AI in its Messages app. This “Magic Compose” feature will suggest responses for users or even rewrite an entire message draft. The AI will let users choose between six styles, from “Excited” to “Chill” to even “Shakespeare.” The Messages AI is going into beta on users’ devices this summer.

Users can also ask the AI to generate an entire wallpaper from scratch using an in-built text-to-image diffusion model. Users are directed through “structured prompts” to create an image that could include, for example, a subject and an art style. The phone should adjust to the new color palette after users set their AI wallpaper. AI will also be able to generate custom reactive wallpapers using emoji, plus create “3D wallpapers” from existing photos that introduce parallax scrolling backgrounds that respond to your phone’s movement.

This is in addition to new screen customization options coming with the launch of Android 14 later this year. Using a new interface, users will be able to more fully edit and personalize the location, color, and theme of their lockscreen shortcuts and widgets.

New Magic Editor AI features in Google Photos

Google showcased new updates to its Magic Eraser feature, now calling a suite of AI-based editing features “Magic Editor.” Along with removing extraneous people, items, or other elements of a picture, the company said users will be able to transform objects inside each photo. The company showed how users might go about removing a bag strap or displacing an object, even the photo subject, to a different location in an image.

Photos users should also be able to punch up elements of an image, such as the lighting or the clouds in the sky. Google said these updates will help those elements blend “seamlessly” into the rest of the image.

All Pixel phones should gain early access to Magic Editor later this year, though the company explicitly said things might not be as clean-looking as in its promotional videos.

AI Will Soon Make Google Maps Even More Immersive

With the help of Google’s new AI tools, Google Maps will soon be getting a much more elaborate Immersive View feature (which previously focused on landmarks) that relies on the billions of images that have been captured for Street View, as well as satellite imagery, to let users further explore a suggested route. It almost turns route planning into an interactive video game, giving cyclists a bird’s-eye view of the bike lanes they’ll be relying on. Drivers will be able to preview intersections, and even the amount of parking available around their destination. The previewed route even shows what the weather will be like depending on the time of day, so you’ll not only know every last turn ahead of time, but what you should be wearing.

Immersive View for routes will be available in the coming months for cities including “Amsterdam, Berlin, Dublin, Florence, Las Vegas, London, Los Angeles, New York, Miami, Paris, Seattle, San Francisco, San Jose, Tokyo, and Venice.”

On the developer side of things, Google is introducing a new “Aerial View API” allowing app makers to add more immersive points-of-interest that include detailed, 3D overhead views allowing users to see not just the specific locale, but the area around it, too. One great use of this API is for realtor or apartment hunting apps, allowing prospective buyers or renters to see the surrounding neighborhood and what amenities are nearby such as parks, or even access to major roads.

Google is also making the “high-res, 3D imagery behind Google Earth” available to developers as part of an experimental new release, letting app makers build similarly immersive experiences without sourcing their own satellite imagery or generating their own 3D models. In a blog post today, Google suggested the release could be a great tool for tourism: 3D maps of national parks, complete with 3D mountains, trees, and rivers, could let potential tourists see a destination in more detail before planning a trip. It could also enhance the experience of an architectural landmark by adding interactive overlays with interesting facts about the site and its history.

Google is pushing its new API hard

Google announced its own PaLM API for sale in March this year. Always the jealous dancing partner, Google now wants to become as popular as OpenAI is with its own API for integrating AI into existing services.

Thomas Kurian, the CEO of Google Cloud, told the I/O crowd that the company is partnering with a number of enterprise services like Salesforce, but it’s also handing out its LLM to companies like Canva, Uber, and even Wendy’s through the Cloud enterprise side of Google’s business.

Canva already introduced some beta AI tools into its program earlier this year, and Wendy’s is likewise diving headfirst into AI technology with a chatbot-powered drive-thru built on Google’s Chirp speech-to-text model.

The company also said its Codey code generation service is being advertised to other businesses, such as software-as-a-service company Replit, allowing users to ask the app for near-instantaneous code for a new program.

What does this mean for Google?

It’s no secret that Google has tilted its entire apparatus toward AI development, but the company has so far struggled to make a case for its refocus. Major researchers have left the company specifically to warn about the dangers of unchecked AI development. A widely circulated internal document posted by a Google engineer proclaimed Google needs to focus less on proprietary AI development and more on bringing open source development into the fold.

“We are approaching it boldly, with a sense of excitement… We’re doing this responsibly in a way that underscores the deep commitment we feel to get it right,” Pichai said during the keynote.

Wednesday’s announcements are supposed to revitalize the company’s image in the AI space. Earlier this year, Google got beaten to the punch by Microsoft and its Bing AI based on OpenAI’s GPT-4 language model. Its own Bard AI cost the company both prestige and millions of dollars in stock price after its chatbot showcased an inaccuracy in its public debut.

Bard has been collectively considered a rather limp first reveal for the company that long spearheaded AI development. Now that the Bard AI is loose in the world, and with a completely new UI for Google Search incoming, the company wants to regain, or at least maintain, its dominance of the search space.

And with its emphasis on coding, Google is trying to prime its enterprise business partners to go PaLM rather than GPT-4 or some other equivalent LLM for their business needs. The problem with language models generating code is that even the most sophisticated model will inevitably get something wrong. Google is doing what few others have done, or are likely capable of doing, and is creating a scaled version of PaLM. As we mentioned, the “Gecko”-sized LLM can run directly on a phone, but the “Unicorn” version of PaLM is supposed to be much bigger. Google wants to advertise these language models to “support entire classes of products.”

Bigger AI models eat up more power, making them very expensive to train and run continuously, and that’s not to mention the amount of water needed to keep the machines cool. Even without the obvious climate impact of expanding these models, these heavy expenses mean Google will need to see a return on AI investment, or at least keep its numbers steady so it doesn’t see users or businesses fly over to Microsoft.

